Safeguarding the future: AI’s the top priority for Cybersecurity Awareness Month 2023

Recently we held a fantastic event for our SebDB community. As per usual, it was lively and thought-provoking. (N.B.: You can join the fun here.)

We asked the community what was on their minds, and what they wanted more information about.

On this occasion, one concern came to the surface in a big way.

AI. Specifically, the challenges it poses for the cybersecurity landscape.

Now, bear in mind, these weren’t average voices, either. Everyone who spoke up was well versed in, and deeply invested in, security awareness and human cyber risk management.

These folks had AI on their minds. And so do you. (The fact you’re reading this is a major giveaway, NGL.)

October is National Cybersecurity Awareness Month

2023 marks the 20th National Cybersecurity Awareness Month. What better time to tackle the pressing topic of AI?

As we write this, summer’s in full swing. But before we know it, we’ll be entering official sweater weather. The time for planning is right now.

If you’re reading this, chances are you and your teammates bear the weight of your organization’s security on your shoulders. You may be scrambling for some new Cybersecurity Awareness Month ideas.

Picture this: All in one month, you succeed in:

- Getting AI-related risks on everyone’s radar

- Influencing security behaviors

- Making everyone in your organization feel good about cybersecurity

Don’t get us wrong: this isn’t about AI fear-mongering (we’ll leave that to the tabloids and LinkedIn “influencers”). The reality is that there’s a lot of nuance in how artificial intelligence creates both opportunities and challenges for cybersecurity.

If AI’s a new car, you’re not trying to make everyone an F1 driver. Mostly, you’re teaching people how to cross the road safely.

Your job is preparing people for the potential vulnerabilities AI introduces. It’s preventing AI-related misery from darkening your organization’s door.

Artificial intelligence is a growing threat to organizations

There’s no denying AI is blazing a trail and making headlines. The tools are becoming part of everyday workplace tasks like research, writing, and data analysis (whether you want them to be or not).

But as its use grows, so do the threats. Let’s talk about two of the biggest concerns.

Risky behavior change: People are sharing sensitive information with AI tools.

We conducted a recent study and found that 64% of US office workers have entered work information into a generative AI tool. A further 28% weren’t sure whether they had.

An alarming 93% of people asked were potentially sharing confidential information with artificial intelligence tools.

Why? For one thing, the tools can be very convincing. It can be hard to tell whether the information they generate is accurate. Further, people tend to trust the tools because they’re often seen as more objective than humans.

But sharing sensitive information with artificial intelligence tools poses significant risks. For example, it could lead to data leaks, which in turn can lead to financial losses, reputational damage, and even legal action. It can also leave the person who shared the information more exposed to phishing attacks.

Incoming attacks: Artificial intelligence is making phishing attacks more convincing

Another key concern is that people, on the whole, struggle to tell the difference between text written by a human and text written by artificial intelligence.

A recent study by the University of Kent found that over 60% of people (in both the UK and US) aren’t confident in their ability to tell the two apart.

This leaves people vulnerable to AI-led cyberattacks. And when you consider that AI tools are being used to create ever more convincing phishing messages, it’s clear the issue needs addressing, and fast.

How exactly can you use the spotlight of Cybersecurity Awareness Month (CSAM) to boost AI savviness? Let’s run through some ideas.

The AI Cybersecurity Awareness Month curriculum: Make your people AI-aware in 2023 and beyond

AI-aware does not equal AI-overwhelmed. So we’ve kept it simple with three key messages as a starting point.

(Side note: Customize your approach for optimal impact—you know your organization.)

Message 1: AI-generated content is becoming more and more sophisticated. Be skeptical of anything that seems too good to be true. If you’re not sure, ask your cybersecurity team for help.

Bring it to life:

- Provide training on how to detect AI-generated content. Teach people how to spot the signs of a fake, and how to protect themselves from being tricked.

- Use real-world examples. Share examples of AI-generated content that has been used in phishing scams or other attacks. This will help people understand the risks and be more likely to spot them.

Message 2: If you’re ever unsure about whether or not something is AI-generated, just ask your cybersecurity team. They’re always happy to help.

Bring it to life:

- Make it easy for people to get help. Provide clear and concise instructions on how to contact the cybersecurity team.

- Be responsive. Make sure that the cybersecurity team is responsive to people’s questions and concerns.

- Build trust. Make sure that people trust the cybersecurity team and feel comfortable reaching out for help.

Message 3: AI can be a powerful tool for boosting productivity, but it’s important to remember security comes first. Let’s make sure we’re using AI responsibly by following all the security protocols.

Bring it to life:

- Run sessions that focus on the risks associated with AI-driven data leaks. Provide people with clear and concise information about the risks, and how they can protect themselves.

- Be clear about the consequences of data breaches. Explain the potential consequences of a data breach, both for the organization and for the individuals involved.

- Incorporate AI security protocols into existing processes. This will help people understand the importance of security and make it easier for them to follow the protocols.

- Regularly update the security protocols and communicate the changes. This keeps the protocols current and makes sure people are aware of the latest threats.

- Emphasize the importance of security awareness. Remind people that security is everyone’s responsibility and that they should take steps to protect themselves and the organization.

There you have it: a solid foundation to help your people navigate AI-related risks.

Next, meet the toolkit that’ll elevate your Cybersecurity Awareness Month efforts to new heights.

Rise above the AI risks: Supercharge your organization’s resilience with our Security Awareness toolkit

AI’s influence on cybersecurity grows daily. You need to throw everything at building a resilient security posture. Lucky for you, our Cybersecurity Awareness Month toolkit can supercharge your CSAM efforts, including the success of your AI education.

Here’s what we’re packing:

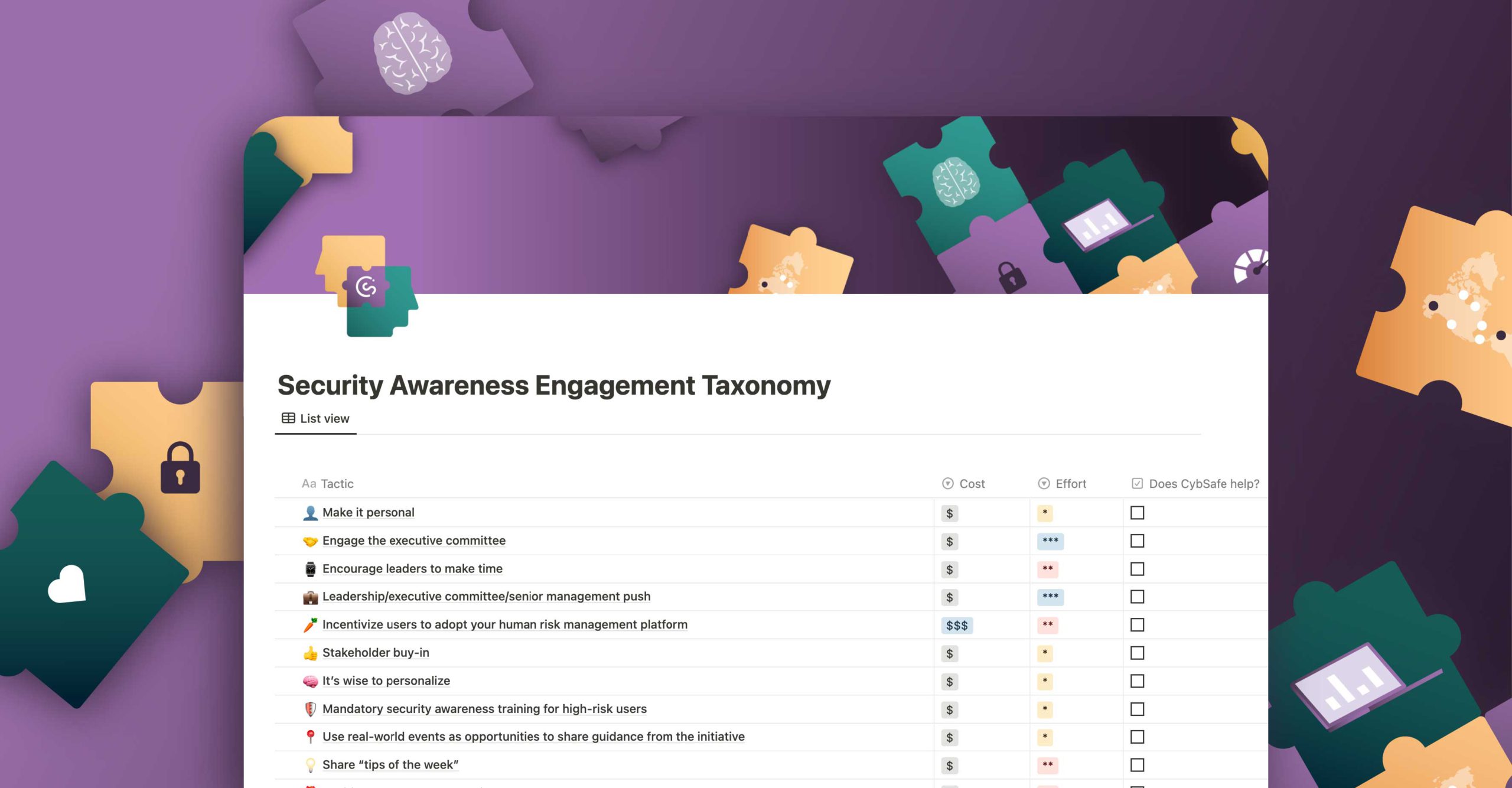

Security Awareness Engagement Taxonomy

A collection of 30+ approaches to boost security awareness engagement created by our Science & Research team, supported by security professionals in the SebDB Community.

Organized by cost, tactic type, and effort, it helps you use best-practice methods to ensure everyone understands how important they are in keeping your organization secure.

![Security awareness toolkit](https://cdn.www.cybsafe.com/wp-content/uploads/2023/08/CYBSAFE-security-awareness-toolkit-230815MS-Recovered-09.jpg)

Free, accredited security awareness training modules

Get a flavor of how we do it here at CybSafe. Exclusive to the toolkit, we’re giving you access to FIVE modules from our platform, for free!

Passphrases

Are your people still using weak, easily guessed passphrases? (Spoiler: Yep.) This module can help to change that!

It helps people to use strong, unique passphrases that will keep your systems safe from cybercriminals.

Protecting your devices

Your people are cybercriminals’ biggest target. And the more devices they use, the better it is for the bad guys.

This device security module will help you keep your people’s devices safe from malware, phishing attacks, and other threats.

Spotting fake emails, featuring James Linton

Phishing emails are a major threat to businesses of all sizes.

That’s why we collaborated with expert James Linton, social engineer and email prankster extraordinaire. This module will turn your people into laser-eyed, fake-email-spotting pros.

Sophisticated attacks

We know cybercriminals are constantly evolving their attacks.

This module will help get your people prepared for anything. They’ll know how to identify and defend against the latest threats. Costly cyber incident averted!

Are you really a target?

Everyone is a potential target for cybercrime.

But this module enables people to assess their risk and take steps to protect themselves. So you can sleep soundly at night, knowing that your organization is much safer.

![Security awareness toolkit](https://cdn.www.cybsafe.com/wp-content/uploads/2023/08/CYBSAFE-security-awareness-toolkit-230815MS-Recovered-10.jpg)

Webinar: 30+ proven ways to increase security awareness engagement

Human-related security incidents continue to plague organizations of all kinds.

But ask yourself, how many tactics are you using to drive engagement? If you can count them on one hand, it’s time to put aside an hour to listen to this.

In this on-demand webinar, we asked leading industry voices from Meta, New York Life and Raytheon Technologies to help us identify how to create a culture of security awareness in your organization.

![Security awareness toolkit](https://cdn.www.cybsafe.com/wp-content/uploads/2023/08/CYBSAFE-security-awareness-toolkit-230815MS-Recovered-11.jpg)

Blog: 23 Cybersecurity Awareness Month ideas for 2023

We show you how to use behavioral science to:

- supercharge your awareness efforts

- maximize impact

- create lasting change in people’s habits

We also share some tips from innovative organizations that made a splash (and had fun) with their Cybersecurity Awareness Month campaigns.

AI risk mitigation demands a proactive, comprehensive approach

AI isn’t going anywhere. It’s here to stay, revolutionizing the way we live and work.

That means you can’t just hide out and wait for this AI thing to blow over. Your people need upskilling and educating to stay on AI’s good side.

Cybersecurity Awareness Month is a golden opportunity to drive home the most pertinent points that will keep them and your organization safe.

By influencing behaviors and educating people, you can elevate your organization’s cyber resilience to new heights.

When it comes to cybersecurity resilience, you need the right ally by your side. CybSafe’s science-based approach and cutting-edge resources empower our clients to navigate the AI landscape with confidence. And they’ve been thrilled with the experience—and the results.

How did their journey begin? They booked a demo. You can book one too, right here.

So, seize this Cybersecurity Awareness Month with gusto, and make it relevant to today’s landscape. Here’s to making it an AI-mazing experience!

BONUS CONTENT: Real-world cybersecurity mishaps

AI awry: Real-world cybersecurity mishaps

Let’s take a look at some real-world AI cybersecurity incidents. You can even use these to illustrate the dangers to your organization’s people.

Fake news bots spread lies: In 2019, hackers used AI to create fake news articles that were indistinguishable from real ones. These articles were then used to spread misinformation and propaganda. This type of attack can have a significant impact on organizations. For example, if a fake news article claimed that a company’s product was dangerous, this could lead to a loss of sales and reputational damage.

Facial recognition duped by a feline: In 2020, researchers at Cognitec Systems fooled a facial recognition AI into thinking that a picture of a cat was a picture of a human. This shows that AI can be fooled by simple tricks, which attackers could use to gain access to secure systems. For example, if a threat actor could fool a facial recognition system into thinking they were an authorized user, they could then access sensitive data or systems.

AI emails scammed millions: In 2021, a group of hackers used AI to break into a major bank’s security system. They used AI-generated emails to fool the bank’s employees into clicking on malicious links. This resulted in the theft of millions of dollars from the bank.

Chatbot superspreads COVID lies: In January 2022, researchers at the University of Oxford found that an AI-powered chatbot was being used to spread COVID-19 misinformation on social media. The chatbot was able to generate realistic-looking text that appeared to be from real people, and it was used to spread false claims about the pandemic. This could have led people to make uninformed decisions about their health, with a negative impact on public health.

Deepfake dupes CEO, company loses millions: In February 2022, the CEO of a major pharmaceutical company was impersonated in a fraudulent Zoom call. The caller used an AI-powered deepfake to make it appear as if the CEO was actually on the call, and convinced the company to wire them $2 million. Attacks like this risk significant financial losses and can also damage an organization’s reputation.

AI malware data theft leaves retailer’s reputation in tatters: In March 2022, a major retailer was targeted by a group of hackers who used AI-powered malware to steal customer data. The malware bypassed security systems and stole the personal information of millions of customers. Criminals could use this data to commit identity theft or other crimes, and the breach could also cause reputational damage.